Note: This 2020 post was updated in 2024; some things have changed.

Generators are related to coroutines...and I thought I knew what they were. But looking at examples of Python coroutines made me wonder exactly what the difference was, and what (if anything) they could do that yielders + wrapping functions could not.


So I thought I'd try translating a coroutine example.

Python3 Example

A top hit for Python coroutines is apparently https://www.geeksforgeeks.org/coroutine-in-python/. I now take it that this style is out of date, and that current Python3 coroutines have much more of a JavaScript/C# async/await feel.

    # Python3 program for demonstrating
    # coroutine chaining

    def producer(sentence, next_coroutine):
        '''
        Producer which just split strings and
        feed it to pattern_filter coroutine
        '''
        tokens = sentence.split(" ")
        for token in tokens:
            next_coroutine.send(token)
        next_coroutine.close()

    def pattern_filter(pattern="ing", next_coroutine=None):
        '''
        Search for pattern in received token
        and if pattern got matched, send it to
        print_token() coroutine for printing
        '''
        print("Searching for {}".format(pattern))
        try:
            while True:
                token = (yield)
                if pattern in token:
                    next_coroutine.send(token)
        except GeneratorExit:
            print("Done with filtering!!")
            next_coroutine.close()

    def print_token():
        '''
        Act as a sink, simply print the
        received tokens
        '''
        print("I'm sink, i'll print tokens")
        try:
            while True:
                token = (yield)
                print(token)
        except GeneratorExit:
            print("Done with printing!")

    pt = print_token()
    pt.__next__()
    pf = pattern_filter(next_coroutine = pt)
    pf.__next__()

    sentence = "Bob is running behind a fast moving car"
    producer(sentence, pf)

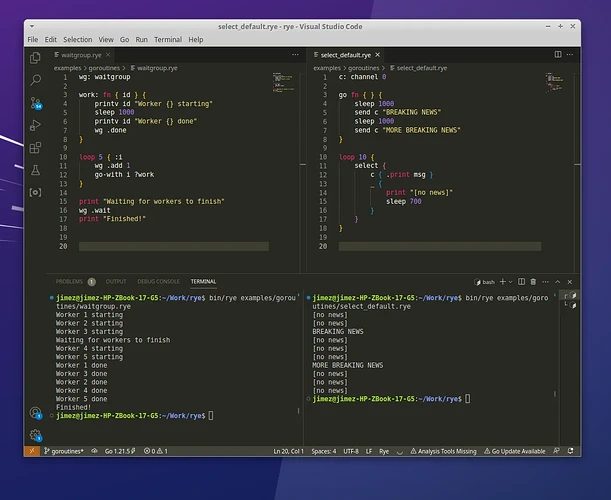

(This does look like it's leading toward async/await, which as I mentioned is what the Python3 stuff looks like. As I shuffle around how this kind of producer/consumer pattern might be managed more legibly, I feel a resonance with the writeup "What Color is Your Function". That author's conclusion seems to match mine, and maybe that of others: Go's channels and "goroutines" are probably a better answer than trying to emulate these other, more bizarre coroutine examples.)
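For comparison, here is a rough sketch of how the same pipeline might look in the async/await style, with `asyncio.Queue` standing in (loosely) for a channel. The queue wiring, the use of `None` as an end-of-stream marker, and the `results` list are my own assumptions for illustration; none of it comes from the linked example.

```python
import asyncio


async def producer(sentence, out_queue):
    # Split the sentence and push each token downstream.
    for token in sentence.split(" "):
        await out_queue.put(token)
    await out_queue.put(None)  # assumption: None marks end-of-stream


async def pattern_filter(in_queue, out_queue, pattern="ing"):
    # Forward only the tokens containing the pattern.
    while (token := await in_queue.get()) is not None:
        if pattern in token:
            await out_queue.put(token)
    await out_queue.put(None)  # pass the end-of-stream marker along


async def sink(in_queue, results):
    # Collect tokens instead of printing, so the result can be inspected.
    while (token := await in_queue.get()) is not None:
        results.append(token)


async def main(sentence):
    to_filter, to_sink = asyncio.Queue(), asyncio.Queue()
    results = []
    await asyncio.gather(
        producer(sentence, to_filter),
        pattern_filter(to_filter, to_sink),
        sink(to_sink, results),
    )
    return results


matches = asyncio.run(main("Bob is running behind a fast moving car"))
print(matches)  # ['running', 'moving']
```

Each stage here only knows about its queues, not about the stage on the other end, which is the part that feels closer to the channel model.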

Ren-C Version of That Example

Here is what I put together, keeping the names mostly the same (the sink is EMIT-TOKEN rather than print_token) and just adding a couple of comments:

    /producer: func [sentence [text!] next-coroutine [action?]] [
        let tokens: split sentence space
        for-each 'token tokens [
            next-coroutine token  ; Python says `next_coroutine.send(token)`
        ]
        next-coroutine null  ; Python says `next_coroutine.close()`
    ]

    /pattern-filter: func [next-coroutine [action?] :pattern [text!]] [
        pattern: default ["ing"]

        return yielder [token [~null~ text!]] [
            print ["Searching for" pattern]
            while [token] [  ; Python does a blocking `token = (yield)`
                if find token pattern [
                    next-coroutine token
                ]
                yield ~  ; yield to producer, so it can call w/another token
            ]
            print "Done with filtering!!"
            next-coroutine null
            yield:final ~
        ]
    ]

    /emit-token: yielder [token [~null~ text!]] [
        print ["I'm sink, I'll print tokens"]
        while [token] [  ; Python does a blocking `token = (yield)`
            print token
            yield ~  ; yield to pattern-filter, so it can call w/another token
        ]
        print "Done with printing!"
        yield:final ~
    ]

    /et: emit-token/
    /pf: pattern-filter et/

    sentence: "Bob is running behind a fast moving car"
    producer sentence pf/

The output matches the example.

What's Different?

They're similar, though I think the Ren-C version is more elegant (modulo the YIELD:FINAL, which I think we may want to fix, but I'll discuss that in a minute.)

You'll notice that a key difference is that Python uses YIELD as a method of getting input.

    while True:
        token = (yield)
        if pattern in token:
            next_coroutine.send(token)

This strikes me as... weird. The idea is that you "send" a generator the value it will experience as the result of its yield expression.
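To make that concrete, here is a minimal sketch of the send mechanics in isolation. The `echo_sink` name and the `received` list are made up for illustration; the `next()` call is the same "priming" step that the example above spells as `pt.__next__()`.

```python
def echo_sink(received):
    """Generator-based coroutine: each value sent in is appended to `received`."""
    while True:
        token = yield          # suspends here; resumes with whatever .send() passes
        received.append(token)


received = []
sink = echo_sink(received)
next(sink)                     # prime it: run the body up to the first yield
sink.send("running")           # inside the generator, `yield` evaluates to "running"
sink.send("moving")
sink.close()                   # raises GeneratorExit at the suspended yield
print(received)                # ['running', 'moving']
```

Without the priming step, Python raises a TypeError, since a just-started generator has no suspended yield to receive the value.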

With a Ren-C yielder, you "send" it the argument just by calling it as a function...and that can be any number of arguments, received normally in the frame. Then the YIELD just gives you the opportunity to return a value to that call (which we don't need in this case).

It makes a lot more sense, because here you just think of it as a function that will still be in the same state the next time you call it. YIELD is just morally equivalent to RETURN.
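The "call it like a function" view can be approximated in Python with a small wrapper around a generator. This is only a sketch: the `yielder` decorator here is a hypothetical helper of mine, not a Python built-in and not how Ren-C implements YIELDER, and it only handles a single argument per call.

```python
import functools


def yielder(genfunc):
    """Hypothetical decorator: expose a generator as a stateful callable.

    Each call's argument is delivered to the suspended `yield`, and whatever
    the generator yields next is returned to the caller.
    """
    @functools.wraps(genfunc)
    def make(*args, **kwargs):
        gen = genfunc(*args, **kwargs)
        next(gen)  # run to the first yield so the first call can send a value

        def call(value):
            return gen.send(value)

        return call

    return make


@yielder
def running_total():
    total = 0
    while True:
        value = yield total    # receive the call's argument, hand back the total
        total += value


add = running_total()
print(add(5))  # 5
print(add(3))  # 8
```

The caller sees an ordinary function that remembers its state. The body still has to collect its input from the yield expression, though, which is the part the Ren-C yielder avoids by receiving arguments in the frame.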

What's the YIELD:FINAL for?

So this is related to the idea that when you make a call to a generator and the body finishes, that typically means it has no generated value to give back... so it gives you a definitional raised error as the end signal. This is part of enabling a full-band generator result.

But when you're calling a coroutine as a value sink and not a value source, you don't want the last call you make to the sink to return a raised error, because then you'd have to suppress the error at the callsite.
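Python has an analogous wrinkle, which may help show the problem. In this sketch (the `sink_until_none` name and the choice of `None` as the close signal are assumptions that mirror the NULL convention above), the final call into the sink makes the body finish, and the caller gets StopIteration for its trouble:

```python
def sink_until_none(received):
    """Sink that treats None as the close signal, like NULL in the Ren-C version."""
    while True:
        token = yield
        if token is None:
            return             # body finishes: the caller's send() raises StopIteration
        received.append(token)


received = []
sink = sink_until_none(received)
next(sink)
sink.send("running")

try:
    sink.send(None)            # the last call into the sink
    ended_quietly = True
except StopIteration:
    ended_quietly = False      # the callsite had to suppress the end signal

print(received, ended_quietly)  # ['running'] False
```

That try/except at the callsite is the kind of noise YIELD:FINAL is there to avoid.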

This does point to something a bit odd about YIELDER: since you're passing it values, either it is never going to run out of values (and is just processing what you give it in a loop), or one of the values you pass it is a "close yourself" signal...and here we're using NULL. If there's more than one parameter, this means your close signal would have to supply some kind of dummy values for the other parameters.

I think this probably means that there needs to be a way to send this close signal without passing parameters. close next-coroutine/ may or may not be a good syntax for it.

But then we have to ask what kind of clean exception model this would give. One idea could be that if it's in a suspended state, then on being unsuspended it would return a raised error instead of the yielded value. If you needed to handle being closed out from under yourself, then reacting to that exception would be the place to do it.

However...if you didn't have any exception handling on your YIELD, then the CLOSE would result in an abrupt failure. Basically, you can only CLOSE yielders that are prepared to receive the message.
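For reference, this is roughly how Python's own `close()` plays out, which is a slightly different trade-off from the idea above. The helper names are hypothetical. In Python the GeneratorExit is raised at the suspended yield; a generator that doesn't catch it simply ends without complaint, rather than failing abruptly.

```python
def prepared(log):
    try:
        while True:
            token = yield
            log.append(token)
    except GeneratorExit:
        log.append("cleanup")  # reacts to being closed while suspended


def unprepared(log):
    while True:
        token = yield          # GeneratorExit surfaces here and just ends the body
        log.append(token)


def run(genfunc):
    log = []
    gen = genfunc(log)
    next(gen)                  # prime
    gen.send("running")
    gen.close()                # delivered at the suspended yield
    return log


print(run(prepared))    # ['running', 'cleanup']
print(run(unprepared))  # ['running']
```

So Python chose "unprepared generators close silently", where the proposal here would make an unprepared yielder fail on CLOSE.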

Subtle. Anyway, this wasn't a super huge priority in 2020...and it still isn't here in 2024... but it's interesting to look at, and keep in mind.