UTF8-Everywhere has been running along relatively well...even without any optimization on ASCII strings.

But there's a next level of bugaboo to worry about, and that's Unicode normalization. This is where certain codepoint sequences are considered to make the same "glyph"...e.g. an accented character can be a single precomposed codepoint, or a two-codepoint sequence that is the unaccented character followed by the combining codepoint for the accent.
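
To make that concrete, here is a minimal sketch of the two encodings of "é". It uses Python's standard `unicodedata` module purely as a convenient stand-in (it is not utf8proc), and the variable names are just for illustration.

```python
import unicodedata

composed = "\u00e9"     # é as one precomposed codepoint
decomposed = "e\u0301"  # e followed by COMBINING ACUTE ACCENT

# The codepoint sequences differ, so a plain comparison says "not equal"
naive_equal = composed == decomposed

# Normalizing both sides to the same form first makes them compare equal
canonical_equal = (
    unicodedata.normalize("NFC", composed)
    == unicodedata.normalize("NFC", decomposed)
)

print(len(composed), len(decomposed), naive_equal, canonical_equal)
```

Both strings should render identically, which is exactly why the mismatch is so easy to miss.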

Since there's more than one form for the codepoints, one can ask which form you canonize them to. You can either try to get them to the fewest codepoints (the composed form, NFC, for the smallest file to transmit) or the most (the decomposed form, NFD, to make it easier to process them as their decomposed parts).
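
As a rough sketch of that tradeoff, the snippet below normalizes one string both ways and counts codepoints and UTF-8 bytes. It assumes Python's `unicodedata` as the normalizer, where "NFC" is the composed form and "NFD" is the decomposed one. Note that NFC is not guaranteed to be the absolute minimum codepoint count for every input; it just composes where the standard says to.

```python
import unicodedata

text = "cafe\u0301"  # "café" written with a combining accent

nfc = unicodedata.normalize("NFC", text)  # composed form
nfd = unicodedata.normalize("NFD", text)  # decomposed form

# (codepoint count, UTF-8 byte count) for each form
sizes = {
    "NFC": (len(nfc), len(nfc.encode("utf-8"))),
    "NFD": (len(nfd), len(nfd.encode("utf-8"))),
}

print(sizes)
```

For this input the composed form is 4 codepoints in 5 bytes, and the decomposed form is 5 codepoints in 6 bytes.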

On the plus side... Unicode provides a documented standard and instructions for how to do these normalizations. The Julia language has what seems to be a nicely factored implementation in C with few dependencies, called "utf8proc". It should not be hard to incorporate that.

On the minus side... pretty much everything about dealing with Unicode normalization. There have been cases of bugs where filenames got normalized, but then the filesystem did not recognize the name with the shuffled codepoints as the same filename...despite it looking the same visually. So you can wind up with data loss if you're not careful about where and when you do these normalizations.
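
Here is a small simulation of that failure mode. The `disk` dictionary and `lookup` function are hypothetical stand-ins for a filesystem that compares names byte-for-byte; real filesystems differ in whether and how they normalize, so treat this as an illustration of the mechanism rather than of any particular OS.

```python
import unicodedata

# Name as it was originally written to "disk": decomposed accents
stored_name = "re\u0301sume\u0301.txt"

# Hypothetical byte-exact filesystem: keys are the raw UTF-8 bytes of names
disk = {stored_name.encode("utf-8"): b"important data"}

def lookup(name):
    """Return file contents, or None if no byte-identical name exists."""
    return disk.get(name.encode("utf-8"))

# Some layer "helpfully" normalizes the name before asking for the file
normalized_name = unicodedata.normalize("NFC", stored_name)

found_original = lookup(stored_name)        # the data comes back
found_normalized = lookup(normalized_name)  # None: same look, other bytes

print(found_original, found_normalized)
```

If a tool then decides the file is "missing" and recreates or deletes things based on that, you get the data loss scenario.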

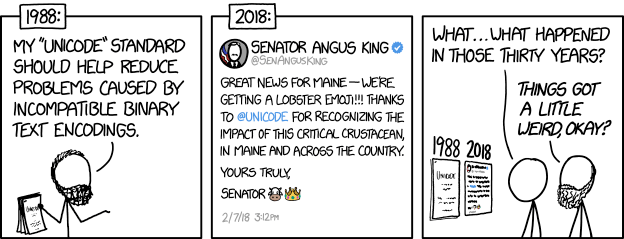

This could get arbitrarily weird, especially with WORD! lookups. Consider the kinds of things that can happen historically:

```
>> o: make object! [LetTer: "A"]

>> find words-of o 'letter
== [LetTer]
```

The lookup has to match regardless of casing, but the original casing also has to be preserved...and this means you could get a wacky casing you weren't expecting pretty easily. Now add Unicode normalization (I haven't even mentioned case folding).

- Do we preserve different un-normalized versions as distinct synonyms?

- When you AS TEXT! convert a WORD! does the sequence of codepoints vary from one same-looking word to another?

- Are conversions between TEXT! <=> WORD! guaranteed not to change the bytes?
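
One possible answer to those questions can be sketched as a symbol table that looks words up by a canonical key but hands back whichever spelling it saw first. Everything here (`canon_key`, `WordTable`, `intern`) is a hypothetical illustration in Python, not how any existing interpreter does it, and the key function is a simplification of full Unicode caseless matching.

```python
import unicodedata

def canon_key(spelling):
    """Key that ignores both case and composed/decomposed differences."""
    folded = unicodedata.normalize("NFC", spelling).casefold()
    # Case folding can disturb normalization, so normalize once more
    return unicodedata.normalize("NFC", folded)

class WordTable:
    """Maps every synonym of a word to the first spelling interned."""

    def __init__(self):
        self._spellings = {}  # canon key -> first spelling seen

    def intern(self, spelling):
        return self._spellings.setdefault(canon_key(spelling), spelling)

words = WordTable()
first = words.intern("LetTer")
second = words.intern("letter")  # same word, so "LetTer" comes back

print(first, second)
```

Under this design the un-normalized versions are synonyms rather than distinct words, but the bytes you get back from a word depend on who interned it first, which is the same "wacky casing" surprise extended to codepoint sequences.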

It's pretty crazy stuff. I'm tempted to say this is another instance where making a strong bet could be a win. For instance: say that all TEXT! must use the minimal canon forms--and if your file isn't in that format, you must convert it or process it as a BINARY!.
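
A minimal sketch of what that strong bet might look like, assuming NFC is the "minimal canon form" and using Python's `unicodedata.is_normalized` (available since Python 3.8) as the checker. The `to_text` function name is made up for illustration.

```python
import unicodedata

def to_text(data):
    """Accept bytes as text only if they are valid UTF-8 already in NFC.

    Anything else is rejected, leaving the caller to either normalize
    explicitly or keep working with the raw bytes.
    """
    text = data.decode("utf-8")  # raises UnicodeDecodeError if invalid
    if not unicodedata.is_normalized("NFC", text):
        raise ValueError("input is not NFC; convert it or keep it binary")
    return text

accepted = to_text("caf\u00e9".encode("utf-8"))

try:
    to_text("cafe\u0301".encode("utf-8"))
    rejected = False
except ValueError:
    rejected = True

print(accepted, rejected)
```

The appeal is that once something has passed this gate, every later comparison can be a plain byte comparison.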

Or there could also be an alternative type, maybe something like UTF8!...which supported the full codepoint range?

Anyway...this stuff is the kind of thing you can't opt out of making a decision on. I'm gathering there should probably be a conservative mode that lets you avoid potential damage from emoji and wild characters altogether, and then that mode can be relaxed with certain settings based on what kind of data you're dealing with.

The only standard that seems to exist sets a limit of 30, arbitrarily!
